As a customer's network expands with additional Red Hat OpenShift clusters, it can become increasingly difficult to manage the lifecycle of each cluster and keep track of applied configurations. Furthermore, performing manual Day 2 operations can be quite challenging. Utilizing a GitOps approach with Red Hat Advanced Cluster Management for Kubernetes can effectively deal with the challenge of managing remote clusters by ensuring Git is the single source of truth for applied configurations to each cluster.

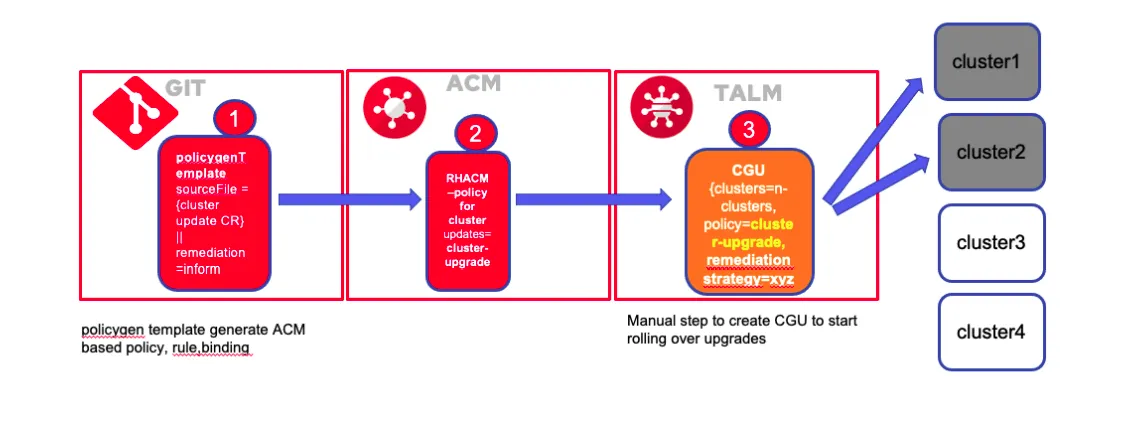

In this article, I’ll describe how to create the complete workflow for upgrading managed clusters using the GitOps method. In brief, the user fills in the required upgrade parameters in a form, which is written to a YAML/JSON object and committed and pushed to Git. Argo CD synchronizes these objects into the Red Hat Advanced Cluster Management cluster. Red Hat Advanced Cluster Management triggers the upgrade after applying the policies to the managed cluster through the Topology Aware Lifecycle Manager (TALM) operator using the ClusterGroupUpgrade (CGU) object.

Cooking recipe

Upgrading a cluster starts with the user filling in a form on a front-end web page with the required parameters. The web page inserts the inputs into a Jinja template and commits the result to the Git repository. Argo CD continuously monitors the Git repository. When it detects changes, it pulls them, converts the PolicyGenTemplate (PGT) into policies, and pushes them to Red Hat Advanced Cluster Management.
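The templating step can be sketched in a few lines. The example below is only an illustration: it uses a plain shell heredoc in place of the Jinja template, and the function name render_pgt is hypothetical. The fields mirror the PolicyGenTemplate shown in the "Objects and parameters" section.

```shell
#!/bin/sh
# Hypothetical helper: print a PolicyGenTemplate for one cluster label,
# channel, and target version. A shell heredoc stands in for the Jinja
# template mentioned in the article.
render_pgt() {
  cluster="$1"   # label value applied to the managed cluster, e.g. ocp1
  channel="$2"   # update channel, e.g. stable-4.13
  version="$3"   # target version, e.g. 4.13.12
  cat <<EOF
apiVersion: ran.openshift.io/v1
kind: PolicyGenTemplate
metadata:
  name: "${cluster}-upgrade"
  namespace: "ztp-${cluster}-upgrade"
spec:
  bindingRules:
    name: "${cluster}"
  remediationAction: inform
  sourceFiles:
    - fileName: ClusterVersion.yaml
      policyName: "platform-upgrade-prep"
      metadata:
        name: version
        annotations:
          ran.openshift.io/ztp-deploy-wave: "1"
      spec:
        channel: "${channel}"
    - fileName: ClusterVersion.yaml
      policyName: "platform-upgrade"
      metadata:
        name: version
      spec:
        channel: "${channel}"
        desiredUpdate:
          version: ${version}
          force: true
EOF
}

# Example use: render_pgt ocp1 stable-4.13 4.13.12 > pgt.yaml
```

The rendered file is what gets committed and pushed, so the form only ever needs to supply the three parameters.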

Upon receiving the request from Argo CD, Red Hat Advanced Cluster Management prepares the policies that must be applied to the managed clusters in order to upgrade them. As an extra precaution, the policies are created with the inform remediation action, so Red Hat Advanced Cluster Management waits for the green light from the TALM operator before actually starting the upgrade. The green light is a ClusterGroupUpgrade (CGU) object: once the CGU is created and enabled, TALM remediates the policies and the upgrade begins on all the managed clusters identified in the CGU.

Prerequisites

The described workflow assumes two clusters are ready. The first is the managed cluster. The second is the hub cluster, which runs Red Hat Advanced Cluster Management (with its MultiClusterHub), Argo CD (the OpenShift GitOps operator), and the TALM operator.

Objects and parameters

The Git source will contain the PolicyGenTemplate as shown below:

    apiVersion: ran.openshift.io/v1
    kind: PolicyGenTemplate
    metadata:
      name: "ocp1-upgrade"
      namespace: "ztp-ocp1-upgrade"
    spec:
      bindingRules:
        name: "ocp1" # refers to the label applied to the managed cluster
      remediationAction: inform
      sourceFiles:
        - fileName: ClusterVersion.yaml
          policyName: "platform-upgrade-prep"
          metadata:
            name: version
            annotations:
              ran.openshift.io/ztp-deploy-wave: "1"
          spec:
            channel: "stable-4.13" # desired channel on the managed cluster
        - fileName: ClusterVersion.yaml
          policyName: "platform-upgrade"
          metadata:
            name: version
          spec:
            channel: "stable-4.13" # desired channel on the managed cluster
            desiredUpdate:
              version: 4.13.12 # desired version on the managed cluster
              force: true

Before committing the PolicyGenTemplate, it is worth validating it. A simple way to do that is to use the ztp-site-generator container. Place the PolicyGenTemplate (along with kustomization.yaml and ns.yaml) in the ~/ztp_policygen/policygentemplates directory, as in the following structure:

    $ tree
    .
    ├── kustomization.yaml
    ├── ns.yaml
    └── pgt.yaml
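The contents of kustomization.yaml and ns.yaml are not shown here. As a minimal sketch, assuming the usual GitOps ZTP layout in which PolicyGenTemplate files are listed under generators and plain manifests under resources, the two files could be created like this. The namespace name comes from the PolicyGenTemplate; everything else is an assumption to adapt.

```shell
#!/bin/sh
# Directory used for validation (override with WORKDIR if needed).
workdir="${WORKDIR:-./ztp_policygen/policygentemplates}"
mkdir -p "${workdir}"

# Namespace referenced by the PolicyGenTemplate metadata.
cat > "${workdir}/ns.yaml" <<'EOF'
apiVersion: v1
kind: Namespace
metadata:
  name: ztp-ocp1-upgrade
EOF

# Assumed layout: the PolicyGenTemplate is a generator input,
# the namespace is an ordinary resource.
cat > "${workdir}/kustomization.yaml" <<'EOF'
apiVersion: kustomize.config.k8s.io/v1beta1
kind: Kustomization
generators:
  - pgt.yaml
resources:
  - ns.yaml
EOF
```

Copy pgt.yaml into the same directory before running the generator.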

Generate CRs from this policygentemplate file, using the ztp-site-generator container:

$ podman run --rm --log-driver=none -v ./ztp_policygen/policygentemplates:/resources:Z,U ztp-site-generator:latest generator config .

If the PolicyGenTemplate file is valid, the generated CRs are written to the ~/ztp_policygen/policygentemplates/out/generated_configCRs directory:

    $ tree
    .
    ├── kustomization.yaml
    ├── ns.yaml
    ├── out
    │   └── generated_configCRs
    │       └── ocp1-upgrade
    │           ├── ocp1-upgrade-placementbinding.yaml
    │           ├── ocp1-upgrade-placementrules.yaml
    │           ├── ocp1-upgrade-platform-upgrade-prep.yaml
    │           └── ocp1-upgrade-platform-upgrade.yaml
    └── pgt.yaml
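If the validation runs in a script rather than by eye, the same check can be automated. The helper below is a hypothetical sketch: it only confirms that the four files listed in the tree output exist and are not empty; it does not inspect their contents.

```shell
#!/bin/sh
# Hypothetical helper: confirm the generator produced the four expected CRs.
check_generated() {
  outdir="$1"   # e.g. ./out/generated_configCRs/ocp1-upgrade
  prefix="$2"   # PolicyGenTemplate name, e.g. ocp1-upgrade
  missing=0
  for suffix in placementbinding placementrules platform-upgrade-prep platform-upgrade; do
    f="${outdir}/${prefix}-${suffix}.yaml"
    if [ -s "${f}" ]; then
      echo "ok       ${f}"
    else
      echo "missing  ${f}"
      missing=1
    fi
  done
  # Non-zero exit status when at least one file is absent or empty.
  return "${missing}"
}

# Example use:
# check_generated ./out/generated_configCRs/ocp1-upgrade ocp1-upgrade
```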

Preparing the GitOps ZTP site configuration repository

Before using the ZTP GitOps pipeline, you must configure the hub cluster with Argo CD:

1. Pull the ztp-site-generate image:

$ podman pull registry.redhat.io/openshift4/ztp-site-generate-rhel8:v4.13

2. Create a directory and export the Argo CD content there:

$ mkdir -p ./out
$ podman run --log-driver=none --rm registry.redhat.io/openshift4/ztp-site-generate-rhel8:v4.13 extract /home/ztp --tar | tar x -C ./out

3. Modify the two Argo CD applications, out/argocd/deployment/clusters-app.yaml and out/argocd/deployment/policies-app.yaml, to match your Git repository. In each file, the URL points to the Git repository, targetRevision indicates which branch to monitor, and path specifies the path to the SiteConfig and PolicyGenTemplate CRs, respectively.

4. Install the ZTP GitOps plugin by patching the Argo CD instance in the hub cluster using the patch file previously extracted into the out/argocd/deployment/ directory. Run the following command:

$ oc patch argocd openshift-gitops -n openshift-gitops --type=merge --patch-file out/argocd/deployment/argocd-openshift-gitops-patch.json

5. Apply the pipeline configuration to the hub cluster by using the following command:

$ oc apply -k out/argocd/deployment
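The three fields edited in step 3 are easy to get wrong, so a quick review of both files can help. The sketch below assumes the files use the standard Argo CD Application field names repoURL, targetRevision, and path; the helper name show_app_source is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: print the Git source fields of each Argo CD
# Application manifest passed as an argument, with line numbers.
show_app_source() {
  for app in "$@"; do
    echo "== ${app}"
    grep -nE '^[[:space:]]*(repoURL|targetRevision|path):' "${app}"
  done
}

# Example use:
# show_app_source out/argocd/deployment/clusters-app.yaml \
#                 out/argocd/deployment/policies-app.yaml
```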

Build the pipeline

All the preparations are complete, so we're ready to build the GitOps pipeline.

1. In the Argo CD UI, link the repository. From the settings, click Connect Repo using HTTPS and enter the repository URL, the credentials, and the certificate, if any.

2. Create the Argo CD application that maps the Git source to the Red Hat Advanced Cluster Management cluster, which is the local cluster in this case. Click the New App button on the main page, specify the application name and project name, and select Auto-Create Namespace in the sync options. Scroll down and choose the repository that was connected in the previous step. Finally, select the target cluster from the drop-down list; it should point to the Red Hat Advanced Cluster Management cluster.
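The same application can also be described declaratively instead of through the UI. The following is a sketch only: the application name, project, repository URL, branch, and path are placeholders I made up for illustration, while https://kubernetes.default.svc is the address Argo CD uses for the cluster it runs on.

```shell
#!/bin/sh
# Write an Argo CD Application manifest roughly equivalent to the UI steps.
# Name, project, repoURL, targetRevision, and path are placeholders.
cat > ocp1-upgrade-app.yaml <<'EOF'
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: ocp1-upgrade
  namespace: openshift-gitops
spec:
  project: default
  source:
    repoURL: https://git.example.com/org/ztp-policies.git
    targetRevision: main
    path: policygentemplates
  destination:
    server: https://kubernetes.default.svc   # the local (hub) cluster
    namespace: ztp-ocp1-upgrade
  syncPolicy:
    automated: {}
    syncOptions:
      - CreateNamespace=true                 # same as Auto-Create Namespace
EOF

# Apply on the hub cluster once the placeholders are replaced:
# oc apply -f ocp1-upgrade-app.yaml
```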

Commit and upgrade

At this point, Argo CD is monitoring the Git repository and maps any change to its destination, the Red Hat Advanced Cluster Management cluster. After the commit, Argo CD detects the difference and pulls the PolicyGenTemplate. Thanks to the GitOps ZTP plugin, the placement rules, placement binding, and required policies are generated.

Red Hat Advanced Cluster Management uses the PolicyGenTemplate stored in Git as the source for the cluster policies. It performs the cluster upgrade after the created policies are applied to the managed cluster through the TALM operator using the ClusterGroupUpgrade (CGU) object.

Right now, the policies are shown in Red Hat Advanced Cluster Management, but they still display a non-compliant status because TALM has not yet been told to act on them. The ClusterGroupUpgrade is the last piece of the process: TALM uses it to link the policies to the managed cluster. Apply the following YAML to create the CGU. In this case, the managed cluster is labeled with name=ocp1.

    apiVersion: ran.openshift.io/v1alpha1
    kind: ClusterGroupUpgrade
    metadata:
      name: clustergroupupgradeocp1
      namespace: ztp-install
    spec:
      backup: false
      enable: true
      managedPolicies:
        - ocp1-upgrade-platform-upgrade-prep # policies created in PGT
        - ocp1-upgrade-platform-upgrade
      remediationStrategy:
        timeout: 240
        maxConcurrency: 1
      clusterSelector:
        - name=ocp1
      preCaching: false
      actions:
        afterCompletion:
          deleteObjects: true
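Applying the CGU and looking at its conditions can be wrapped in a small helper. This is a sketch under the assumption that the YAML above has been saved to a local file and that oc is logged in to the hub cluster; the function name apply_cgu and the file name are hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: apply a ClusterGroupUpgrade manifest on the hub
# cluster, then print the conditions TALM reports for it.
apply_cgu() {
  manifest="$1"    # file holding the ClusterGroupUpgrade YAML
  name="$2"        # e.g. clustergroupupgradeocp1
  namespace="$3"   # e.g. ztp-install
  oc apply -f "${manifest}" || return 1
  oc get clustergroupupgrades "${name}" -n "${namespace}" \
    -o jsonpath='{.status.conditions}'
}

# Example use:
# apply_cgu cgu.yaml clustergroupupgradeocp1 ztp-install
```

Because deleteObjects is set to true in the CGU, the objects TALM creates for the upgrade are cleaned up once the upgrade completes.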

Validate and verify

The upgrade should now be progressing. There are several ways to check the status. One is the oc CLI, run against the managed cluster:

$ oc adm upgrade

To see the current version:

$ oc get clusterversion

Or you can use the API calls, as shown below:

$ curl -sk --header "Authorization: Bearer $TOKEN" -X GET https://api.xx.xx:6443/apis/config.openshift.io/v1/clusterversions/version | jq '.status.conditions'
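For unattended runs, the check can be turned into a polling loop. The sketch below assumes oc is logged in to the managed cluster and relies on the ClusterVersion status.history list, whose first entry is the most recent update; the function name and default values are hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: poll the managed cluster until the newest
# ClusterVersion history entry shows the target version as Completed.
wait_for_upgrade() {
  target="$1"            # e.g. 4.13.12
  attempts="${2:-120}"   # maximum number of polls
  interval="${3:-60}"    # seconds between polls
  i=0
  while [ "${i}" -lt "${attempts}" ]; do
    latest=$(oc get clusterversion version \
      -o jsonpath='{.status.history[0].version} {.status.history[0].state}')
    echo "latest history entry: ${latest}"
    if [ "${latest}" = "${target} Completed" ]; then
      return 0
    fi
    i=$((i + 1))
    sleep "${interval}"
  done
  # Gave up before the target version reached the Completed state.
  return 1
}

# Example use: wait_for_upgrade 4.13.12
```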

What's next

The next article will tackle the canary upgrade method, where a subset of clusters is upgraded first to ensure that the update does not break existing workloads. Once that is confirmed, the update can be pushed to all clusters.

About the author

Zeid is a cloud consultant with a passion for technology and history. He joined Red Hat in May 2020 and has over 10 years of experience in the IT industry, specializing in cloud migration, infrastructure optimization, and DevOps practices. In his free time, Zeid enjoys exploring history, keeping up with technological advancements, and spending time with his loved ones.
