In today's economy, the expectations placed on your Infrastructure-as-a-Service (IaaS) platform are higher than ever. Virtualization users not only need to optimize their existing resources to offset rising operational expenditures, but also demand a robust, performant and adaptable platform that can handle next-generation workloads, such as AI models that depend on hardware acceleration. Red Hat OpenStack Services on OpenShift continues to evolve to meet these critical IaaS demands. The latest release introduces capabilities that enhance workload management, improve resource utilization and streamline operations, giving virtualization teams greater efficiency, more control and a reliable IaaS foundation for even the most demanding applications.

To help you navigate ongoing change and rising costs, the latest Red Hat OpenStack Services on OpenShift release introduces updates in key areas, including intelligent workload optimization for better resource management, an AI-optimized infrastructure platform ready for next-generation applications, and new security capabilities such as kernel live patching, which applies fixes without rebooting or interrupting running workloads. Ready to dig into what these new features can do for you? Let's take a look!

Dynamic resource optimization

In Red Hat OpenStack Services on OpenShift feature release 2 (March 19, 2025), Red Hat introduced the workload optimization operator as a technology preview.

Based on the upstream OpenStack Watcher project, the new operator gives cloud administrators Day 2 capabilities for managing workloads running on OpenStack cloud infrastructure. Administrators can:

- Optimize workload distribution by reducing infrastructure hotspots that form when running workloads become unevenly distributed after they are created.

- Reduce operational costs by balancing workloads to make better use of infrastructure resources, which can result in:

  - Lower hardware requirements.

  - Lower utility costs (power, cooling, etc.).

  - Fewer hotspots and less congestion, which means fewer service outages and fewer engineers scrambling to respond.

- Enhance performance by providing a balanced infrastructure that can handle more workloads, meet more demands and support business growth.
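To make hotspot reduction more concrete, the Python sketch below shows one simple greedy approach to rebalancing: repeatedly take the most utilized compute host above a threshold and move its smallest instance that fits onto the least utilized host, as long as the destination stays at or below the threshold. This is an illustrative assumption, not Watcher's actual strategy code. The `Host` class, the `plan_migrations` function and the 0.75 threshold are hypothetical, and load is modeled as a single number (for example, vCPUs in use).

```python
from dataclasses import dataclass, field


@dataclass
class Host:
    """Hypothetical compute host with a single-dimension capacity (e.g. vCPUs)."""
    name: str
    capacity: float
    vms: dict[str, float] = field(default_factory=dict)  # instance name -> load

    @property
    def load(self) -> float:
        return sum(self.vms.values())

    @property
    def utilization(self) -> float:
        return self.load / self.capacity


def plan_migrations(hosts: list[Host], threshold: float = 0.75,
                    max_steps: int = 100) -> list[tuple[str, str, str]]:
    """Return (instance, source, destination) moves that reduce hotspots.

    Greedy sketch: while the busiest host is above `threshold`, move its
    smallest instance that keeps the least busy host at or below `threshold`.
    The hosts are updated in place to reflect the planned moves.
    """
    plan = []
    for _ in range(max_steps):
        src = max(hosts, key=lambda h: h.utilization)
        if src.utilization <= threshold:
            break  # no hotspot left
        dst = min(hosts, key=lambda h: h.utilization)
        moved = False
        # sorted() returns a list, so deleting from src.vms below is safe.
        for vm, load in sorted(src.vms.items(), key=lambda kv: kv[1]):
            if (dst.load + load) / dst.capacity <= threshold:
                del src.vms[vm]
                dst.vms[vm] = load
                plan.append((vm, src.name, dst.name))
                moved = True
                break
        if not moved:
            break  # no single move helps; stop instead of looping
    return plan


hosts = [
    Host("compute-0", capacity=32, vms={"vm-a": 12, "vm-b": 10, "vm-c": 6}),
    Host("compute-1", capacity=32, vms={"vm-d": 4}),
]
plan = plan_migrations(hosts)
print(plan)  # [('vm-c', 'compute-0', 'compute-1')]
```

In this example, one move brings `compute-0` from 87.5% to 68.75% utilization. Moving the smallest instance that fits keeps each migration cheap; a real planner would also weigh memory, affinity rules and the cost of each live migration.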

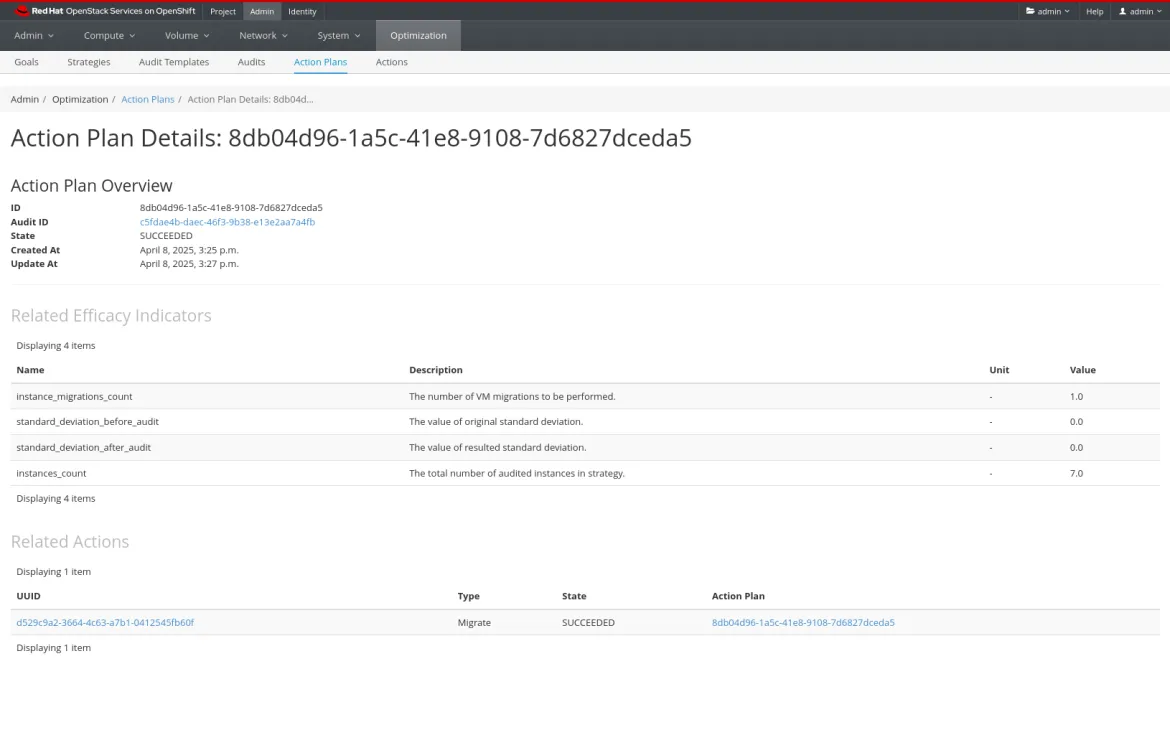

The image shows a Watcher Dynamic Resource Optimization action plan running in Red Hat OpenStack Services on OpenShift, as seen in the Red Hat OpenShift console.

For more information, please visit our latest release notes.

AI-optimized infrastructure platform

Red Hat OpenStack Services on OpenShift is your AI-optimized infrastructure platform, built for multi-tenant, enterprise-scale generative AI with support for NVIDIA and AMD GPU accelerators. Accelerator virtualization enables live migration of stateful applications in VMs, secure multi-tenant sharing and in-VM AI microservices. This lets you launch and run models anywhere with full control and portability.

- Robust, end-to-end integration with NVIDIA GPUs enables advanced capabilities such as:

  - NVIDIA vGPU live migration.

  - NVIDIA NIM microservices running in Red Hat OpenStack Services on OpenShift (RHOSO) virtual machines.

  - Accelerated, optimized deployment of generative AI models.

  - Portability and control, enabling model deployment across various infrastructures.

  - Documentation on MIG-backed vGPU support for inference and fine-grained resource allocation.

- Support for AMD Instinct GPUs includes:

  - AMD GPU enablement with ROCm GIM drivers.

  - Documentation for assigning AMD Instinct MI210 GPU virtual functions (VFs) through SR-IOV PCIe passthrough, now available as a technology preview in feature release 2.

  - Planned SR-IOV support for AMD GPU partitioning, which will let you slice a 192 GB HBM3 AMD Instinct MI300X accelerator into multiple right-sized virtual GPUs. Teams can then share the same card, fit models that would otherwise spill over smaller GPUs, and increase utilization and cost-efficiency across the fleet.

- Other GPU and AI accelerator features available with feature release 2 include:

  - VFIO SR-IOV vGPU support for AI, enabling high-performance networking and GPU passthrough in virtualized environments.

  - GPU metrics collection with Kepler, enabling power monitoring in the OpenShift console.

- Using OpenStack's unified limits quota model (limits registered in Keystone, with usage counted through Placement), administrators gain precise per-project control over resource allocation, now extending to specialized hardware such as vGPUs and, in the future, AMD GPU partitions. This granular control enables accurate metering, strategic oversubscription and auditing of accelerator allocations and instance counts, preventing resource contention between tenants while making better use of valuable accelerator resources across the OpenStack cloud.
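The per-project check behind this kind of quota enforcement can be sketched in a few lines. The Python below is a simplified illustration, not Nova's or Keystone's implementation: it compares current usage plus a request against per-project limits, resource by resource. The resource names follow the naming Nova uses with unified limits (`servers` and `class:<RESOURCE_CLASS>`, such as `class:VGPU`); the `check_quota` function, the limit values and the rule that a resource with no limit entry is denied are assumptions for illustration.

```python
from collections.abc import Mapping


def check_quota(
    limits: Mapping[str, int],
    usage: Mapping[str, int],
    request: Mapping[str, int],
) -> list[str]:
    """Return the resources that a request would push over the project limit.

    Assumption of this sketch: a resource with no limit entry gets a limit
    of 0, so it is denied rather than silently allowed.
    """
    over_limit = []
    for resource, amount in request.items():
        limit = limits.get(resource, 0)
        if usage.get(resource, 0) + amount > limit:
            over_limit.append(resource)
    return over_limit


# Hypothetical project: 2 vGPUs allowed, both already in use.
limits = {"servers": 10, "class:VCPU": 64, "class:VGPU": 2}
usage = {"servers": 3, "class:VCPU": 24, "class:VGPU": 2}
# One new instance from a hypothetical flavor with 8 vCPUs and 1 vGPU.
request = {"servers": 1, "class:VCPU": 8, "class:VGPU": 1}

print(check_quota(limits, usage, request))  # ['class:VGPU']
```

Only the vGPU request is rejected here, even though the project still has room for more servers and vCPUs. Limiting each resource class separately is what keeps one tenant from claiming every accelerator.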

Gen AI-based virtual assistant

Feature release 2 introduces Red Hat Lightspeed for Red Hat OpenStack Services on OpenShift, enabling users to:

- Take advantage of their Red Hat OpenShift platform expertise to run the OpenStack clouds and services they need, and add Red Hat OpenShift operators that enhance their OpenStack experience.

- Streamline issue resolution for cloud operators by extending Red Hat OpenShift Lightspeed, the gen AI-based virtual assistant in Red Hat OpenShift, to include OpenStack information. Through a user-friendly, natural language interface, users can ask Red Hat Lightspeed questions about RHOSO usage and administration and get troubleshooting support that shortens the time to resolution.

The image shows a user interacting with OpenStack Lightspeed within the Red Hat OpenShift web console.

Security

Introducing kernel live patching for Red Hat OpenStack Services on OpenShift environments.

- Starting with feature release 2, users can test a technology preview of kernel live patching for compute nodes. With this feature, users can apply critical security patches in memory to a running Linux kernel without rebooting or interrupting running workloads. For CVE fixes that require kernel updates and are delivered as live patches, you no longer need to drain compute nodes and migrate workloads, which means fewer maintenance windows.
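After a live patch is applied, an operator will usually want to confirm that it is loaded and has finished transitioning before deciding to skip a drain. On RHEL, the kpatch tooling (`kpatch list`) is the usual way to check this. The Python sketch below instead reads the kernel's generic livepatch sysfs interface, where each loaded patch appears as a directory under `/sys/kernel/livepatch/` containing `enabled` and `transition` files. The `loaded_livepatches` function is hypothetical, the sketch assumes a kernel built with livepatch support, and it only reports state; it does not apply anything.

```python
from pathlib import Path


def _flag(path: Path) -> bool:
    """Return True if a sysfs attribute file exists and contains "1"."""
    return path.is_file() and path.read_text().strip() == "1"


def loaded_livepatches(root: str = "/sys/kernel/livepatch") -> dict[str, dict[str, bool]]:
    """Map each loaded live patch to its enabled and in-transition state.

    Returns an empty dict if no patches are loaded or the directory does not
    exist (for example, on a kernel without livepatch support).
    """
    base = Path(root)
    if not base.is_dir():
        return {}
    patches = {}
    for entry in sorted(base.iterdir()):
        if entry.is_dir():
            patches[entry.name] = {
                "enabled": _flag(entry / "enabled"),
                "in_transition": _flag(entry / "transition"),
            }
    return patches


if __name__ == "__main__":
    # A patch is fully in effect once it is enabled and no longer transitioning.
    for name, state in loaded_livepatches().items():
        ready = state["enabled"] and not state["in_transition"]
        print(f"{name}: {'active' if ready else 'not yet active'}")
```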

Ready to level up your virtualization platform?

The latest advancements in Red Hat OpenStack Services on OpenShift empower virtualization users to optimize their infrastructure, embrace next-generation workloads and maintain a security-focused environment to support today's dynamic landscape. From intelligent workload balancing to enhanced AI capabilities and crucial security updates, such as live kernel patching, Red Hat OpenStack Services on OpenShift continues to deliver the tools you need to meet increasing platform needs. Read the latest product documentation to discover how Red Hat can help you build a more efficient, robust and resilient IaaS virtualization platform.


About the author

Sean Cohen is the Director of Product Management in the Hybrid Platforms organization at Red Hat, a leading provider of open-source technologies and hybrid cloud solutions. He oversees the infrastructure and observability business, including strategy and delivery for Red Hat OpenShift and OpenStack Platforms. With over 15 years of experience, Sean has a robust background in senior product management and delivery across enterprise and telco markets, driving cloud infrastructure strategy, product lifecycle management, and leading high-performance cross-functional teams.
