This article details Red Hat's engineering efforts to support running a single-instance Oracle Database 19c on Red Hat OpenShift Virtualization. It provides a comprehensive reference architecture, validation results covering functionality, performance, scalability, and live migration, along with links to testing artifacts hosted on GitHub.

We will demonstrate that OpenShift Virtualization offers robust performance for demanding production workloads, such as Oracle databases, providing a viable virtualization alternative without sacrificing performance. This is especially for technology leaders, architects, engineering teams, and project managers involved in evaluating and adopting single-instance Oracle Database on OpenShift Virtualization.

The architecture design principles focus on resource allocation, partitioning, and abstraction layer optimization for compute, network, and storage. Performance tests using HammerDB with the TPC-C benchmark prove that Oracle Database can successfully run on OpenShift Virtualization with local NVMe storage outperforming Red Hat OpenShift Data Foundation. This article will also highlight observability and monitoring, using Prometheus and Grafana for infrastructure and Oracle-specific insights.

Background

Many customers are seeking virtualization alternatives without sacrificing performance. OpenShift Virtualization provides robust performance for demanding production workloads, including enterprise databases.

One of the most common components in traditional software architecture is the Oracle Database. To support customers interested in evaluating and adopting Oracle Database on OpenShift Virtualization, Red Hat has dedicated engineering resources to provide an optimized experience operating Oracle Database on OpenShift Virtualization.

This article assumes readers have an understanding of Red Hat OpenShift Container Platform. We do not intend to discuss the generic architecture of the Oracle Database, nor performance tuning. Instead, we will explain the architecture options for setting up and configuring OpenShift Virtualization to enable Oracle Database to achieve the best performance.

This post is intended for the following professionals involved in evaluating, validating, and deciding on the adoption of Oracle Single Instance Database on OpenShift Virtualization:

- Technology leaders (e.g., VPs, CTOs): Stakeholders who are responsible to optimize ROI (Return on investment) and TCO (Total cost of ownership) of the day-to-day operations of running Oracle Database workloads in hybrid or on-premises cloud scenarios.

- Architects: Customer architects can review the reference architecture and test results to assess whether OpenShift Virtualization is a viable platform for hosting Oracle Database workloads in their organization. This article provides architectural requirements and enables architects to run independent validations.

- Engineering teams: Engineering teams can leverage the performance tests used by Red Hat during this evaluation, along with reusable artifacts available on GitHub, to accelerate their test setup and automation, streamlining the validation process.

- Project managers: Project managers can use the reference architectures to identify affected components and responsible teams. They can also use the standardized testing.

OpenShift Virtualization architecture overview

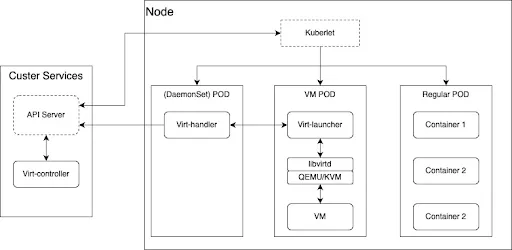

OpenShift Virtualization is the Red Hat implementation of the open source KubeVirt project. It is built on top of the standard OpenShift platform. A virtual machine (VM) runs within a containerized pod, OpenShift Container Platform manages VMs the same as it manages any pod, where a VM instance has access to the same platform services, including security, network, and storage like a regular container application. The only difference is the VM is managed directly at pod level unlike regular workload applications running inside containers.

Architecture components:

- Kernel-based virtual machine (KVM): The VM hypervisor on OpenShift is part of the Linux kernel.

- Virtual machine instance (VMI): Each VM represented by a VMI is created by QEMU using KVM to emulate hardware, QEMU creates user space level isolation.

- KubeVirt: Kubernetes add-on to manage VMs as Kubernetes resources, so that VMs will look like a pod.

virt-operator: Manages KubeVirt components installation and updates.virt-controller: Handles VM lifecycle management (e.g., restart on failure, scaling).virt-handler: A daemon on a KubeVirt enabled node to manage VMs on hosts using KVM/QEMU.virt-launcher: One per VM Pod, acts as the orchestrator that manages the QEMU/KVM virtual machine process inside the pod.- Custom Resources (CRs): Represents a VM definition, running a VM instance, and scheduling/policies.

- Pod wrapper: Serves as a wrapper for the QEMU process. VMI runs inside the Pod as a virtualized guest OS.

- Storage: OpenShift Virtualization supports a variety of storage solutions, including a variety of Kubernetes native options such as OpenShift Data Foundation, Portworx, and more traditional enterprise solutions such as iSCSI, Fibre Channel (FC) SAN storage, and others. The Kubernetes native storage solution, OpenShift Data Foundation, built on the open source Ceph project, delivers scalable, redundant storage with an abstraction layer optimized for Kubernetes environments. OpenShift Data Foundation also supports dynamic provisioning of persistent volumes (PVs) and persistent volume claims (PVCs), simplifying storage management.

For this Oracle Database validation project, we will consider multiple storage alternatives. However, OpenShift Data Foundation will be the primary focus within the scope of this document due to its seamless integration with Kubernetes. When deploying Oracle Database workloads, it is important to evaluate and select the storage solution that best meets your performance requirements and operational needs.

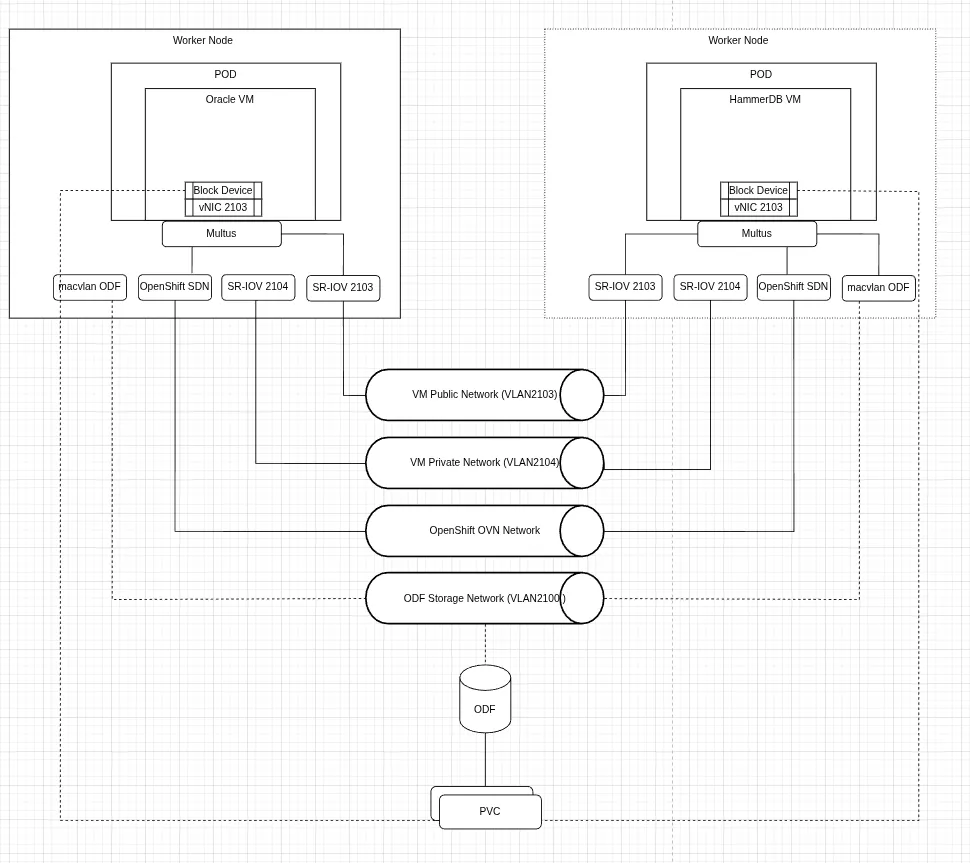

Network: VMs access network via Multus (CNI meta-plugin) or Single Root I/O Virtualization (SR-IOV), where Multus is defined at the pod level.

Oracle Database design principles

When an Oracle Database runs on a virtualized operating system, the VM is responsible for ensuring the database receives adequate system resources to operate efficiently and remain resilient. Since real-world infrastructure resources are limited, the infrastructure architecture must be carefully designed to balance resource allocation and accommodate the varying demands of different workloads.

A common architectural approach to boost Oracle Database performance at the infrastructure level includes the following principles:

- Resource location: Allocate sufficient resources in terms of compute, storage and network to eliminate bottlenecks.

- Resource partitioning: When resources are limited, partition resource requirements and implement tailored solutions to meet specific needs.

- Abstraction layer optimization: Avoid unnecessary or low-value abstraction layers in exchange flexibility for performance gains.

Oracle Database relies heavily on three primary types of system resources:

- Compute: This includes vCPUs, IOThreads, memory, and the ability to scale across nodes.

- Network: Oracle Database is highly sensitive to I/O performance. Client access and storage access have distinct throughput and latency requirements. As a result, Oracle Database architectures often use separate networks for different types of traffic.

- Storage: Redo logs, database tables, and backups have different read/write performance needs. Whenever possible, you should place these on separate physical storage to ensure optimal I/O performance.

OpenShift Virtualization offers the capabilities and flexibility needed to support various approaches for resource allocation based on system resource partitioning needs.

Reference architecture

This section discusses architecture considerations and solution options in Oracle Database on OpenShift Virtualization design.

Compute

Ensure Oracle Database has sufficient computation resources, that OpenShift Virtualization platform provides direct control over:

- Configuring the vCPU and RAM allocation for resource vertical scaling.

- OpenShift Virtualization cluster extensibility for horizontal scalability.

- Control of VM IO Thread count allocation to eliminate pod level I/O bottleneck.

- Avoid overcommitting resources for virtual machines hosting Oracle Database workloads allocating more virtualized CPUs or memory than there are physical resources on the system.

Network

Oracle Database traffic has different performance requirements in terms of network latency, throughput, and reliability. OpenShift Container Platform pod Multus is a capability to partition network traffic and mediate multiple network protocols. Consider the following:

- Implementing different network paths for OpenShift SDN, storage, and virtual machines.

- For Oracle RAC Database installations, further segregate network traffic for RAC instance-to-instance interconnect communication, and “public” network communication.

- For mission critical workloads sensitive to latency and throughput, consider leveraging SR-IOV for virtual network interfaces creating a direct path from VM to underlying physical resources.

Storage

As previously mentioned, OpenShift Virtualization supports a wide range of storage solutions, from Kubernetes-native options like OpenShift Data Foundation and Portworx to traditional enterprise systems such as iSCSI and Fibre Channel (FC) SAN. This flexibility allows users to choose storage that best fits their performance and operational needs.

While there is no universal rule in selecting appropriate storage option, the following principles could be used as guidelines:

- Balance between the need for operational flexibility (ease of provisioning, integration with platform) and performance (IO latency, throughput) requirements.

- Support for multi-write option (shared volume between two or more VMs) that may be required for Oracle RAC Database.

Hardware configuration

The design of the initial performance tests has been scoped to a set of hardware resources available today.

Cluster specification:

- 4 x Dell R660 servers

- 128 CPU threads (2 sockets of Intel Xeon Gold 6430 )

- 256 GB memory

- 1TB root disk

- 4x 1.5 TB NVME drives

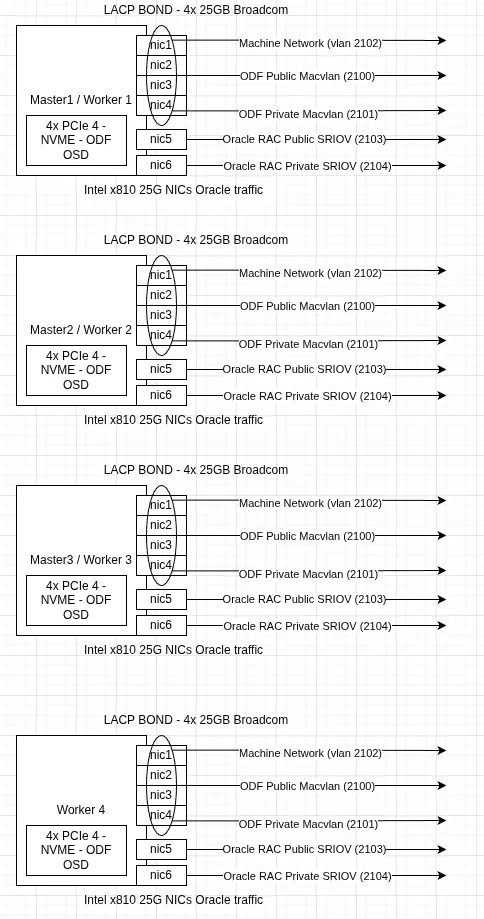

- 4 x 25Gbps Broadcom NIC

- 2 x 25Gbps Intel 810 NIC

OpenShift Virtualization configuration

While the default configuration for OpenShift Virtualization and OpenShift Data Foundation storage provides reasonable performance, further configuration changes have been made to optimize the test platform for IO-intensive workload typical for databases:

- Configured OpenShift Data Foundation to use a performance profile.

- Configured OpenShift Data Foundation and OpenShift Virtualization to separate out OpenShift Data Foundation storage traffic from general Software Defined Network (OpenShift Container Platform SDN) traffic. (Chapter 8. Network requirements | Planning your deployment | Red Hat OpenShift Data Foundation | 4.18)

- Segregated traffic for virtual machines (Oracle Database and HammerDB test harness) from OpenShift Data Foundation storage and OpenShift Container Platform SDN using separate physical network interfaces. To reduce latency and increase throughput, network interfaces introduced to affected virtual machines using Single Root I/O Virtualization (SR-IOV) (Figure 2).

Cluster specification:

- OpenShift version: 4.18.9

- OpenShift Virtualization: Enabled via OperatorHub

- Nodes:

- 3 x Hybrid (Control Plane/ Worker/ Storage) nodes

- 1 x Worker node

- Networking (specific to Oracle Database VMs):

- LACP bond with 4 Broadcom 25Gbps NICs partitioned to segregate OpenShift SDN, OpenShift Data Foundation storage client, OpenShift Data Foundation storage replication traffic.

- Two Intel x810 25GB NICs for virtual machine traffic with two different subnets (Public and Private) configured to be presented to virtual machines using SR-IOV.

- Storage (specific to Oracle Database VMs): OpenShift Data Foundation storage (backed by 4x 1.5 TB NVMe drives) configured with performance profile and using separate storage network.

Oracle Database configuration

The virtual machine to host the Oracle Database is moderately sized to avoid overcommitment of resources and to compare results of testing on different hardware options. The Oracle Database has not been specifically tuned for the Transaction Processing Performance Council Benchmark C (TPC-C) test and largely uses a default configuration with exception of the few common tuning changes based on best practices.

We selected tuning parameters based on the size of the virtual machine, specifics of the benchmark test workload, and monitoring information. We assessed the effectiveness of each change by comparing test results with baseline numbers. Oracle Database configuration could be further optimized following recommendations from Database Performance Tuning Guide.

Figure 3 shows that the Oracle Database and HammerDB client access were on the same network. Data volumes for virtual machines are configured to preallocate disk space to improve write operations.

We performed separate ad-hoc tests to assess the impact of storage on the performance of the database by adding NVMe storage using a local storage operator.

Virtual machine specification:

- OS: RHEL 8.10

- VM Count: 1

- vCPU: 16

- Memory: 48GB

- Storage: 250GB (Root and DB Data residing on the same volume) as block device from RH ODF

- DataVolume: Created using “preallocation: true” (thick provisioning).

- Networking: Connected to public subnet using SR-IOV.

Oracle Database single instance setup:

Oracle Database version: 19c Enterprise Edition with Release Update 26 (version 19.26)

- The database set up with a filesystem as the target for data files (root volume with OpenShift Data Foundation backed storage) using OMF (Oracle Managed Files).

- To ensure compatibility of the test with future versions of Oracle Database, the database has been created using the Container Database (CDB) architecture.

- Memory allocation used totalMemory of 32GB as input for the DB creation wizard (allowing Oracle Database installation to automatically assess SGA/PGA split).

- Additional tuning parameters:

- 4 Data files manually extended to 32GB

- REDO log size adjusted to 4GB

- 4 REDO log disk groups

- FILESYSTEMIO_OPTIONS: SETALL (allowing asynchronous IO and direct IO)

- USE_LARGE_PAGES: AUTO (to optimize CPU usage for large SGA size)

Note: For the performance tests with NVME backed storage, a separate filesystem has been mounted using NVME device and assigned as target destination for datafiles.

Observability and monitoring

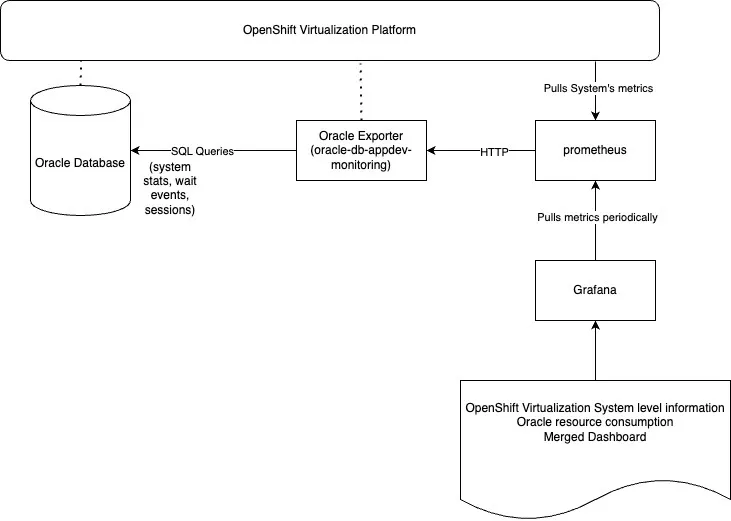

OpenShift offers a powerful, integrated observability platform that consolidates monitoring across both infrastructure and application layers. It natively supports metrics collection, logging, and alerting, and can be extended to include observability data from external applications like Oracle Databases. This unified approach reduces operational complexity while enabling end-to-end visibility.

Observability for OpenShift Virtualization is seamlessly integrated into the same platform, allowing you to monitor virtual machines, system resources, and workloads (i.e., Oracle Databases within a single, consistent monitoring stack).

The Oracle Database Observability Exporter, deployed within OpenShift, collects Oracle Database performance metrics and metadata, which are exposed to Prometheus. Grafana visualizes these metrics, providing real-time dashboards to detect abnormal patterns, resource pressure, and performance issues across Oracle Database and VM layers.

To enhance database-level analysis, you can leverage HammerDB during performance testing to capture snapshots and generate AWR (Automatic Workload Repository) reports. When combined with metrics from Prometheus and Grafana, these reports provide a richer, multidimensional understanding of workload behavior and potential bottlenecks.

Additionally, Oracle Database Enterprise Manager serves as a complementary tool, offering detailed diagnostics and specialized monitoring capabilities tailored to Oracle Databases. Used alongside OpenShift’s unified observability platform, it ensures comprehensive coverage for infrastructure and Oracle Database specific operational insights.

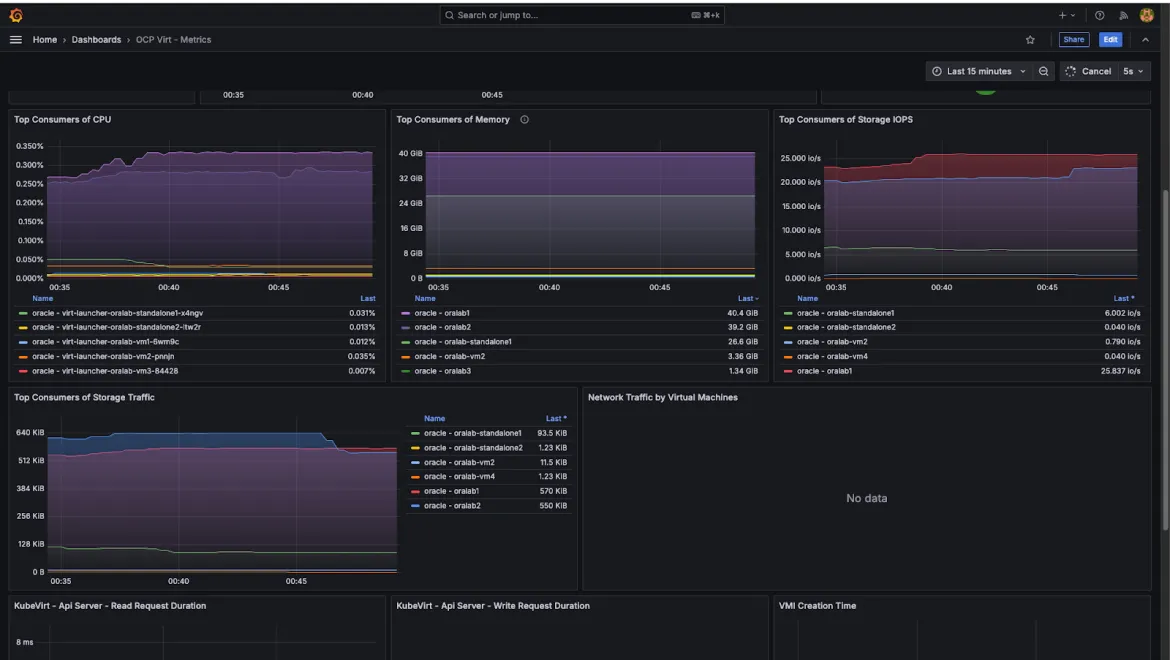

Figure 5 shows a sample Grafana dashboard deployed as part of the observability and monitoring setup for the OpenShift Virtualization platform.

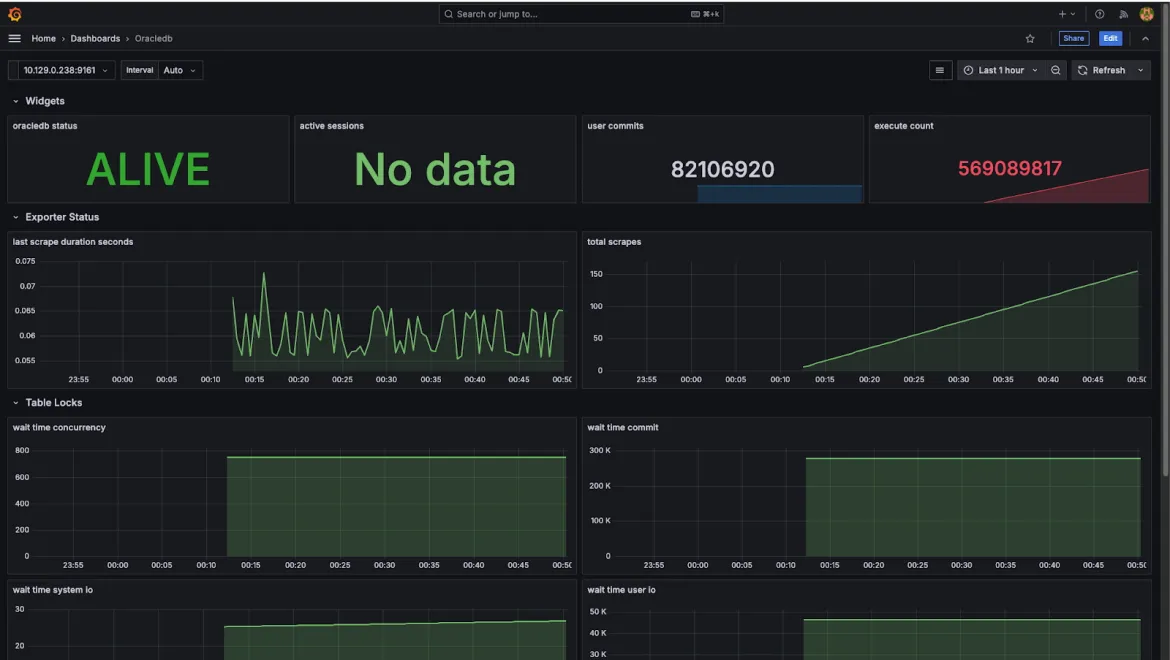

Figure 6 shows a sample Oracle Database Grafana dashboard deployed on the OpenShift Virtualization platform.

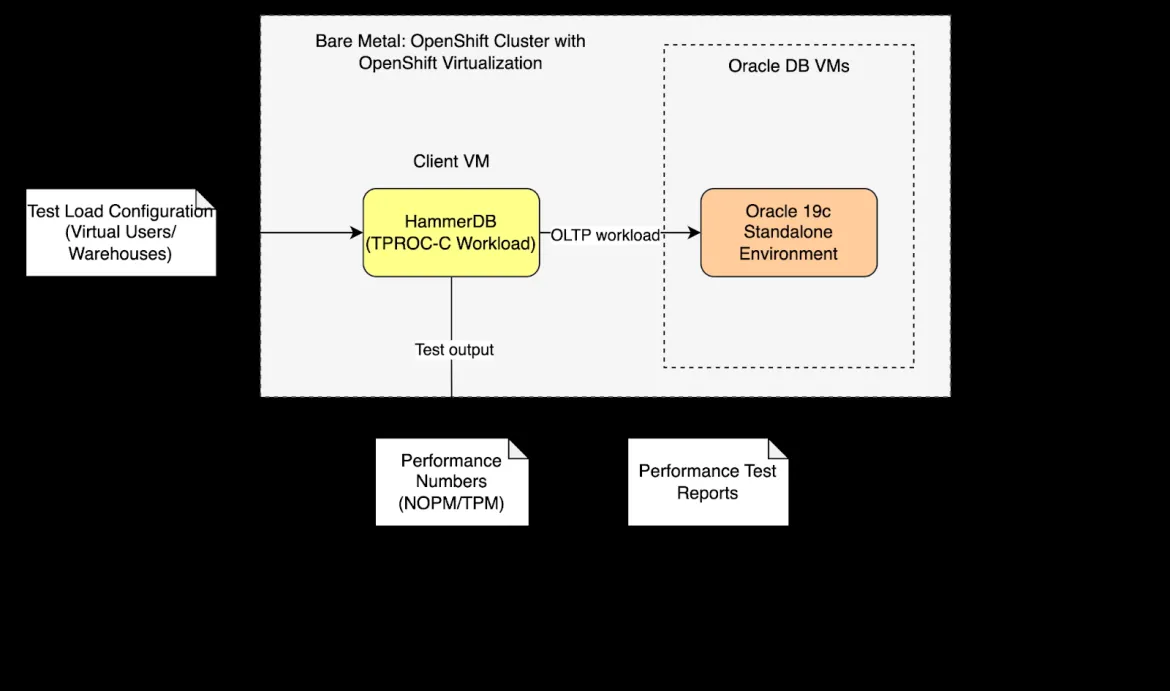

System performance evaluation

The performance test was designed to measure database transaction throughput and query latency for OLTP (Online Transaction Processing) workloads. We used HammerDB, open source database performance testing software to simulate OLTP workloads using the TPC-C benchmark against the single-instance Oracle Database with the previously mentioned system details. The TPC-C test simulates a real-world order management system, with a mix of 80% write operations and 20% read operations, including high-frequency customer orders, payments, inventory checks, and batch deliveries. The test execution involves HammerDB generating TPC-C workloads on Oracle Database within OpenShift Virtualization.

Test coverage summary

With HammerDB test harness, the scale-run profile was configured to simulate meaningful workloads with virtual user counts starting at 20 and scaling up to 100, using 500 warehouses with each test run for 20 minutes. We designed this setup to reflect realistic production scenarios and to evaluate the system’s performance under scaled transactional loads.

Based on the reference architecture configuration, the test results showed strong New Orders Per Minute (NOPM) and Transactions Per Minute (TPM) metrics for the single-instance Oracle Database with OpenShift Data Foundation storage. However, the single-instance Oracle Database with local NVMe storage delivered better performance compared to the OpenShift Data Foundation setup. While the average latency remained relatively stable, we observed occasional spikes.

Final thoughts

OpenShift Virtualization is a feasible and a viable platform to deploy Oracle Database 19c workloads. The ease of setting up OpenShift Virtualization offers robust support for creating virtual machines. Considering these factors, OpenShift Virtualization stands as a serious contender and alternative to competing virtualization technologies offerings. The current performance validation of Oracle Database 19c demonstrates enterprise grade performance on OpenShift Virtualization platform.

Through ad-hoc testing with local NVMe storage, we assessed the impact of high-performance storage options and found strong indications that upgrading to high-performance storage solutions like FC SAN may significantly improve overall performance.

For high-performance workloads, consider:

- High-performance storage options such as FC SAN for Oracle Database data files and redo logs to optimize performance.

- Segment network for virtual machines, OpenShift SDN and storage network preferably using separate physical devices on OpenShift Virtualization nodes.

- SR-IOV (Single Root I/O Virtualization), if supported by hardware to optimize performance of virtual network interfaces of virtual machines hosting Oracle Database workload.

- HugePages with the Oracle Database setting

USE_LARGE_PAGESbased on your workload requirements: This configuration adjusts the memory page size, recommended for improved performance, especially when working with SGAs larger than the default settings.

You can find HammerDB test scripts in this GitHub repository.

The oracle-db-appdev-monitoring GitHub project aims to provide observability for the Oracle Database so users can understand performance and diagnose issues easily across applications and databases. Read the instructions to set up the project on the OpenShift platform.

À propos des auteurs

Parcourir par canal

Automatisation

Les dernières nouveautés en matière d'automatisation informatique pour les technologies, les équipes et les environnements

Intelligence artificielle

Actualité sur les plateformes qui permettent aux clients d'exécuter des charges de travail d'IA sur tout type d'environnement

Cloud hybride ouvert

Découvrez comment créer un avenir flexible grâce au cloud hybride

Sécurité

Les dernières actualités sur la façon dont nous réduisons les risques dans tous les environnements et technologies

Edge computing

Actualité sur les plateformes qui simplifient les opérations en périphérie

Infrastructure

Les dernières nouveautés sur la plateforme Linux d'entreprise leader au monde

Applications

À l’intérieur de nos solutions aux défis d’application les plus difficiles

Virtualisation

L'avenir de la virtualisation d'entreprise pour vos charges de travail sur site ou sur le cloud