In enterprise Kubernetes environments, security risks often arise from overlapping administrative access. Platform engineers, infrastructure operators, and developers may all touch sensitive resources, such as secrets, which creates opportunities for privilege misuse or data exposure. By separating administrative duties using Confidential Containers, organizations can help prevent insider threats, simplify compliance, and align with zero-trust principles.

Kubernetes role-based access control (RBAC) enforces access policies by defining roles and permissions for users, groups, and service accounts. It lets you limit the actions a user can perform, giving team members and applications access only to the resources they need. For example, RBAC can restrict a developer to deploying applications only in specific namespaces, or give an infrastructure team view-only access for monitoring tasks.
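
To make the mechanics concrete, here is a deliberately simplified Python model of how RBAC evaluates a request: a subject may perform a verb on a resource if any of its bindings carries a matching rule. It is a sketch, not the API server's authorizer. It ignores API groups, resource names, aggregation, and the separate Role/ClusterRole objects, and the subjects (`dev-alice`, `infra-bob`, `cluster-admin-carol`) and namespaces are hypothetical.

```python
from dataclasses import dataclass
from typing import FrozenSet, Optional, Tuple


@dataclass(frozen=True)
class PolicyRule:
    """Simplified RBAC rule: which verbs are allowed on which resource types."""
    verbs: FrozenSet[str]
    resources: FrozenSet[str]


@dataclass(frozen=True)
class Binding:
    """Grants rules to a subject in one namespace, or cluster-wide if None."""
    subject: str
    namespace: Optional[str]
    rules: Tuple[PolicyRule, ...]


def is_allowed(bindings, subject, verb, resource, namespace):
    """RBAC is additive: allow as soon as one matching rule is found."""
    for b in bindings:
        if b.subject != subject:
            continue
        # A namespaced binding only applies inside its own namespace.
        if b.namespace is not None and b.namespace != namespace:
            continue
        for rule in b.rules:
            verb_ok = "*" in rule.verbs or verb in rule.verbs
            resource_ok = "*" in rule.resources or resource in rule.resources
            if verb_ok and resource_ok:
                return True
    return False


bindings = [
    # Developer: deploy applications, but only in the team-a namespace.
    Binding("dev-alice", "team-a", (
        PolicyRule(frozenset({"get", "list", "create", "update"}),
                   frozenset({"deployments", "pods"})),)),
    # Infrastructure team: cluster-wide, view-only monitoring.
    Binding("infra-bob", None, (
        PolicyRule(frozenset({"get", "list", "watch"}),
                   frozenset({"pods", "nodes"})),)),
    # Cluster admin: every verb on every resource, everywhere.
    Binding("cluster-admin-carol", None, (
        PolicyRule(frozenset({"*"}), frozenset({"*"})),)),
]

print(is_allowed(bindings, "dev-alice", "create", "deployments", "team-a"))     # True
print(is_allowed(bindings, "dev-alice", "create", "deployments", "team-b"))     # False
print(is_allowed(bindings, "infra-bob", "delete", "pods", "team-a"))            # False
print(is_allowed(bindings, "cluster-admin-carol", "get", "secrets", "team-a"))  # True
```

Because Kubernetes RBAC only ever adds permissions (there are no deny rules), a wildcard binding like the cluster admin's grants read access to secrets in every namespace.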

| Role | Can access | Can't access |
|---|---|---|
| Infrastructure admin | Physical hosts / hypervisors, networks, storage layer | Kubernetes control plane and configs, workloads, secrets |
| Cluster admin | Kubernetes API and control plane; RBAC roles, namespaces, network policies, scheduling; workloads; secrets; unencrypted memory of workloads | Host OS, hypervisors |
| Workload admin | Deploy applications; namespaces, deployments, pods; environment variables; secrets | Node settings, network policies, cluster-wide configs |

However, Kubernetes RBAC has limitations. While it provides fine-grained access control for cluster resources, it does not natively separate infrastructure administration from workload security. The most privileged role, cluster admin, has complete control over all resources, including all secrets made available to the workloads (pods). This level of control creates a challenge for security-conscious organisations that want to prevent Kubernetes administrators from having access to all workload secrets.

What's the difference between Confidential Containers and Confidential Clusters?

Confidential Containers (CoCo) enables a new governance model, where secrets can be delivered securely to workloads inside a Trusted Execution Environment (TEE), bypassing the Kubernetes cluster (and admin) itself. This capability can redefine administrative roles, and segregate cluster and workload admins in Kubernetes.

Confidential Clusters focus on isolating the Kubernetes cluster (control plane and worker nodes) from the infrastructure layer. In a traditional setup, the infrastructure admin (often the cloud provider or platform operations team) may be able to access the host operating system, storage, and potentially the Kubernetes cluster nodes themselves. Confidential Clusters prevent infrastructure admins from accessing or tampering with the control plane, thereby enforcing a strict separation between infrastructure and cluster administration.

While CoCo separates workload admins from cluster and infrastructure admins, Confidential Clusters separate infrastructure admins from cluster admins. Used together, they enable a strong governance model that segregates infrastructure, cluster, and workload admins, each with tightly scoped responsibilities and no overlapping privileges.

The problem of too much power in one role

In a default Kubernetes deployment, a cluster administrator can access all workloads, configurations, and secrets. This means that even if an application owner wants to protect sensitive secrets, there is no way to fully shield them from a Kubernetes admin. This setup poses challenges for security-conscious enterprises, regulated industries, and multi-tenant environments.

How Kubernetes secrets are made available to pods

Kubernetes provides built-in secrets management, but its implementation exposes secrets to cluster administrators and to anyone with admin access to the Kubernetes cluster nodes. Kubernetes makes secrets available to workloads in two ways:

- Volumes: Applications can retrieve secrets from volumes mounted as files within the pod. A cluster admin with access to the worker node's filesystem can also read these secrets.

- Environment variables: Applications can retrieve secrets through environment variables, but this method exposes secrets to any admin who can inspect running processes or environment variables inside a pod.
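
From the application's side, both delivery paths end in plaintext inside the container. The following Python sketch assumes a hypothetical key named `db-password` and a hypothetical mount directory `/etc/secrets`; it checks the environment first and then the mounted file (that order is an arbitrary choice for the sketch). Whatever the pod can read this way is equally readable by anyone who can inspect the pod's environment or the node's filesystem.

```python
import os
import tempfile
from pathlib import Path
from typing import Mapping, Optional


def read_secret(name: str, mount_dir: str = "/etc/secrets",
                env: Mapping[str, str] = os.environ) -> Optional[str]:
    """Return a secret as a pod would see it, or None if it is not provided.

    Checks an environment variable first (e.g. DB_PASSWORD for "db-password"),
    then a file named after the key inside the secret volume mount.
    """
    env_key = name.upper().replace("-", "_")
    if env_key in env:
        return env[env_key]
    path = Path(mount_dir) / name
    if path.is_file():
        return path.read_text()
    return None


# Demo: a temporary directory stands in for the secret volume mount.
with tempfile.TemporaryDirectory() as mount:
    Path(mount, "db-password").write_text("s3cret")
    from_file = read_secret("db-password", mount_dir=mount, env={})
    from_env = read_secret("db-password", mount_dir=mount,
                           env={"DB_PASSWORD": "s3cret-from-env"})
    missing = read_secret("api-key", mount_dir=mount, env={})

print(from_file, from_env, missing)  # s3cret s3cret-from-env None
```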

Unless an external secrets manager is used, Kubernetes stores secrets in etcd, where by default they are base64-encoded but not encrypted. Anyone with access to etcd can therefore retrieve them, which further increases the attack surface.
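
Base64 is an encoding, not encryption. This sketch uses a hypothetical Secret named `db-creds` in the shape returned by `kubectl get secret db-creds -o json`, trimmed to the relevant fields, and shows that anyone who can read the object recovers the value in one step. Configuring encryption at rest protects the copy stored in etcd, but it does not change what a cluster admin sees through the API.

```python
import base64

# A Secret object as returned by the API server, trimmed to relevant fields.
# The name, namespace, and value are hypothetical.
secret = {
    "apiVersion": "v1",
    "kind": "Secret",
    "metadata": {"name": "db-creds", "namespace": "team-a"},
    "type": "Opaque",
    "data": {"password": base64.b64encode(b"s3cret").decode()},
}


def decode_secret_data(obj: dict) -> dict:
    """Return the plaintext of every key in a Secret's `data` field."""
    return {k: base64.b64decode(v).decode() for k, v in obj.get("data", {}).items()}


print(secret["data"]["password"])  # czNjcmV0
print(decode_secret_data(secret))  # {'password': 's3cret'}
```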

A new model: Splitting administrative responsibilities

By combining Confidential Containers (CoCo) and Confidential Clusters, you can introduce a three-way split-administration model that segregates infrastructure, cluster, and workload responsibilities. This governance model allows each group to operate independently within tightly scoped boundaries, significantly reducing the risk of privilege escalation and data exposure.

Infrastructure Admins:

- Operate and manage the underlying infrastructure (hosts, hypervisors, physical machines, and networks)

- In Confidential Cluster setups, infrastructure admins cannot access cluster-level data or workloads, including control plane configurations or secrets

Cluster Admins:

- Manage the Kubernetes control plane and cluster-wide resources such as nodes, networking policies, RBAC roles, namespaces, and scheduling

- Have complete visibility and control within the cluster but cannot access plaintext secrets or tamper with workloads running inside a TEE

- Do not have access to the underlying host infrastructure, thanks to the protections enforced by the Confidential Cluster environment

Workload Admins:

- Own and manage specific workloads and the secrets needed to run them

- Use Confidential Containers and the associated Trustee Key Broker Service (KBS) to securely provision secrets

- Secrets are sealed and can only be unsealed inside the TEE by the workload, never exposed to the cluster or infrastructure layers

- Workload admins are mapped to specific Trustee servers, ensuring strict boundaries and access control

This model can technically be used without an actual TEE, but then a cluster or infrastructure admin could obtain a memory dump of the worker node and read the secrets.

| Role | Can access | Can't access |
|---|---|---|
| Infrastructure admin | Physical hosts / hypervisors, networks, storage layer | Kubernetes control plane and configs, workloads, node memory in cleartext, sealed secrets inside the CoCo TEE |
| Cluster admin | Kubernetes API and control plane; RBAC roles, namespaces, network policies, scheduling | Host OS, hypervisors, sealed workload secrets inside the CoCo TEE, node memory in cleartext |
| Workload admin | Only specific workloads running in TEEs; sealed secrets via Trustee KBS; namespace-scoped resources | Node settings, network policies, cluster-wide configs |
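
One way to sanity-check a split like this is to write the table down as data and confirm that no two roles share a privilege, which is the "no overlapping privileges" property described earlier. The Python sketch below does that. The resource labels are illustrative shorthand for the table cells, not Kubernetes or Trustee identifiers; a real audit would compare actual RBAC bindings, host access lists, and Trustee policies rather than hand-written sets.

```python
from enum import Enum
from itertools import combinations


class Role(Enum):
    INFRA = "infrastructure admin"
    CLUSTER = "cluster admin"
    WORKLOAD = "workload admin"


# Illustrative labels paraphrasing the "Can access" column of the table.
CAN_ACCESS = {
    Role.INFRA: {"hosts", "hypervisors", "networks", "storage"},
    Role.CLUSTER: {"k8s_api", "control_plane", "rbac_roles", "namespaces",
                   "network_policies", "scheduling"},
    Role.WORKLOAD: {"tee_workloads", "sealed_secrets_via_kbs",
                    "namespace_scoped_resources"},
}


def can_access(role: Role, resource: str) -> bool:
    return resource in CAN_ACCESS[role]


def overlapping_privileges() -> dict:
    """Map each pair of roles to the resources they share (empty = fully split)."""
    shared = {}
    for a, b in combinations(Role, 2):
        common = CAN_ACCESS[a] & CAN_ACCESS[b]
        if common:
            shared[(a, b)] = common
    return shared


print(overlapping_privileges())                            # {}
print(can_access(Role.CLUSTER, "sealed_secrets_via_kbs"))  # False
print(can_access(Role.WORKLOAD, "sealed_secrets_via_kbs")) # True
```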

How it works in practice

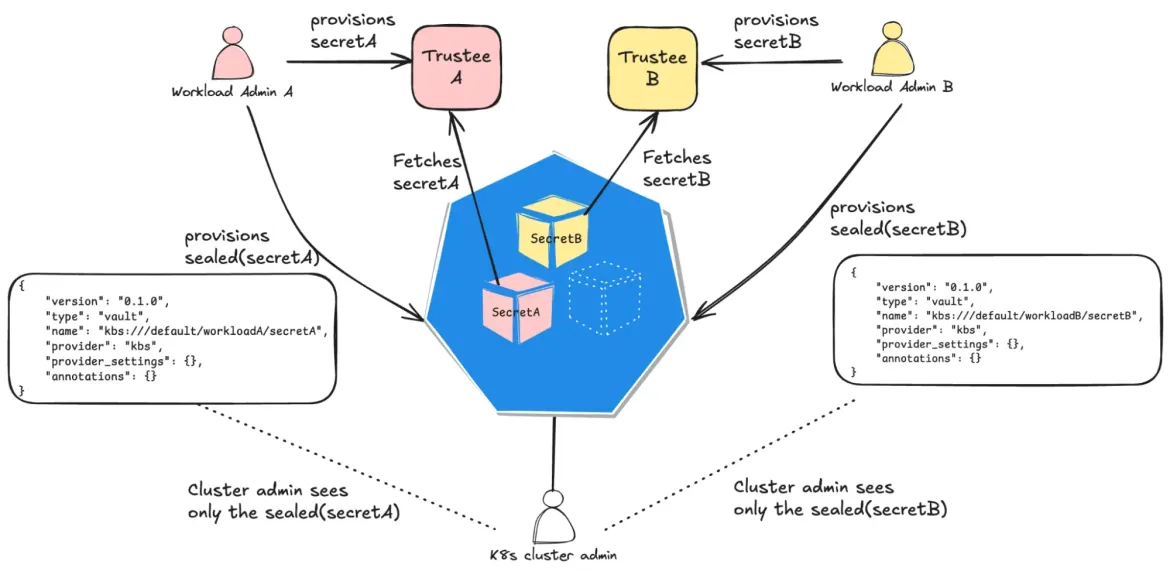

Here's an example CoCo implementation, and how cluster and workload admins are segregated:

- Workload admins provision secrets through a specific Trustee instance (hosted outside the Kubernetes cluster that runs the workload, and managed by the workload admins) and then create a reference to these secrets in the Kubernetes cluster. CoCo provides a sealed secrets feature for this purpose. The plaintext secrets are available only inside the CoCo pod. Read "Exploring the OpenShift confidential containers solution" for more detail on CoCo technology.

- Even a privileged Kubernetes cluster admin cannot retrieve the plaintext secrets, reducing insider risk and exposure.

- This ensures that an organisation can implement a least privilege access model for secrets management, while keeping Kubernetes infrastructure operational.
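
The sealed-secret flow hinges on one rule: the key broker releases a secret only to a requester that proves, through attestation, that it is the expected workload running in a TEE. The Python toy below models just that gate. `ToyKeyBroker`, `measure`, and the resource URI are hypothetical and do not reflect the Trustee KBS API or the CoCo sealed-secret format. In this toy the "evidence" is a bare hash that anyone could recompute; real TEE evidence is a hardware-signed report, which is what actually stops a cluster admin from forging it.

```python
import hashlib
import hmac
from dataclasses import dataclass, field
from typing import Dict


def measure(guest_image: bytes) -> bytes:
    """Stand-in for a TEE launch measurement: a digest of what was booted."""
    return hashlib.sha256(guest_image).digest()


@dataclass
class ToyKeyBroker:
    """Hypothetical broker run by the workload admin, outside the cluster."""
    reference_measurement: bytes
    secrets: Dict[str, str] = field(default_factory=dict)

    def release(self, resource_uri: str, evidence: bytes) -> str:
        # Constant-time comparison of presented evidence with the reference value.
        if not hmac.compare_digest(evidence, self.reference_measurement):
            raise PermissionError("attestation failed: measurement mismatch")
        return self.secrets[resource_uri]


# The Kubernetes Secret holds only this reference, never the plaintext.
SEALED_REF = "kbs:///trading-app/api/key"  # hypothetical resource URI

trusted_image = b"confidential-guest-v1"
broker = ToyKeyBroker(measure(trusted_image), {SEALED_REF: "s3cret-api-key"})

# Workload inside the expected TEE: evidence matches, secret is released.
released = broker.release(SEALED_REF, measure(trusted_image))

# Request from an unexpected environment: evidence does not match.
try:
    broker.release(SEALED_REF, measure(b"debug-image-with-shell"))
    denied = False
except PermissionError:
    denied = True

print(released, denied)  # s3cret-api-key True
```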

Imagine a financial services team deploying a sensitive trading app. The cluster admin sets up networking and policies but cannot access the workload secrets. Using the sealed secrets feature of Confidential Containers, secrets are only provisioned by the workload admin using the Trustee service. Even if the cluster is compromised, the secrets remain protected inside the TEE.

Business outcomes: Risk reduction and compliance

For decision makers, this model brings tangible benefits:

- Regulatory compliance: Helps meet the requirements of industry regulations (GDPR, PCI-DSS, HIPAA, and so on) by limiting privileged access to sensitive data

- Enhanced security posture: Reduces the attack surface by preventing unauthorised access to application secrets

- Seamless multi-tenancy: Supports environments where multiple teams within an organisation share the same Kubernetes cluster, without compromising security

- Zero-trust architecture: Aligns with modern security principles by enforcing strict boundaries between infrastructure and application security

Reduce risk with confidential computing

Confidential computing is more than just a security enhancement. It enables a fundamental shift in Kubernetes administration. You can reduce risk, enforce governance, and build a more secure cloud-native environment for your organization by separating cluster and workload administration.

For the enterprise, this governance model provides a robust way to embrace Kubernetes while maintaining complete control over sensitive data.

Want to implement this in your environment? Learn how Red Hat and confidentialcontainers.org secure Kubernetes. Request a demo or read our blog series.


About the authors

Pradipta is working in the area of confidential containers to enhance the privacy and security of container workloads running in the public cloud. He is one of the project maintainers of the CNCF confidential containers project.

Jens Freimann is a Software Engineering Manager at Red Hat with a focus on OpenShift sandboxed containers and Confidential Containers. He has been with Red Hat for more than six years, during which he has made contributions to low-level virtualization features in QEMU, KVM and virtio(-net). Freimann is passionate about Confidential Computing and has a keen interest in helping organizations implement the technology. Freimann has over 15 years of experience in the tech industry and has held various technical roles throughout his career.

Master of Business Administration at Christian-Albrechts University. Started in insurance IT, then joined IBM in Technical Sales and as an IT Architect. Moved to Red Hat 7 years ago into a Chief Architect role, and now works as Chief Architect in the CTO Organization, focusing on FSI, regulatory requirements and Confidential Computing.
