Agentic AI with Red Hat AI

Agentic AI describes software systems that are designed to solve problems and carry out complex tasks with limited supervision.

Agentic AI uses large language models (LLMs) and builds on the power of generative AI (gen AI). It connects to and communicates with external tools to perceive its environment, decide what to do next, and orchestrate an entire automated task toward a defined goal.
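The perceive, decide, and act cycle described above can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration: the tools `lookup_order` and `send_email` are hypothetical stand-ins for external systems, and the `decide` function is a hard-coded placeholder for the choice an LLM would make in a real agent. It does not represent any Red Hat AI API.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical tools: stand-ins for the external systems an agent would call.
def lookup_order(order_id: str) -> str:
    orders = {"A100": "shipped", "A200": "processing"}
    return orders.get(order_id, "unknown")

def send_email(message: str) -> str:
    return f"email queued: {message}"

TOOLS: Dict[str, Callable[[str], str]] = {
    "lookup_order": lookup_order,
    "send_email": send_email,
}

def decide(goal: str, history: List[Tuple[str, str]]) -> Tuple[str, str]:
    """Placeholder for the LLM: choose the next tool and its argument
    based on what has been observed so far."""
    if not history:
        return "lookup_order", goal
    if len(history) == 1:
        status = history[0][1]
        return "send_email", f"Order {goal} is {status}"
    return "done", ""

def run_agent(goal: str, max_steps: int = 5) -> List[Tuple[str, str]]:
    """Loop: decide on an action, call the tool, record the observation."""
    history: List[Tuple[str, str]] = []
    for _ in range(max_steps):
        tool, arg = decide(goal, history)
        if tool == "done":
            break
        observation = TOOLS[tool](arg)       # act, then perceive the result
        history.append((tool, observation))  # context for the next decision
    return history

if __name__ == "__main__":
    for step in run_agent("A100"):
        print(step)
```

The `max_steps` limit is one simple way to keep the loop bounded, which matters when the decision step is a model rather than fixed logic.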

In an enterprise setting, agentic AI can complete complex and tedious workflows in a fraction of the time, helping to fuel productivity and improve efficiency. Simplify and accelerate your journey to successful agentic AI adoption with help from Red Hat® AI.

How can agentic AI help your organization?

Agentic AI augments traditional workflows by acting as an intelligent orchestrator of the tools you already use. It operates on top of your existing digital infrastructure.

With the “agency” to communicate and collaborate with internal and external data sources, it creates a sense of perception and context. This allows it to proactively anticipate needs, adapt to changes, and even reflect on its own work.

Why Red Hat AI?

Red Hat AI provides a flexible approach and stable foundation for building, managing, and deploying agentic AI workflows within existing applications.

Simplified agent workflow assembly

Red Hat AI offers capabilities that make it easier to build new agents and modernize existing ones. This includes access to Llama Stack, which offers an out-of-the-box agent framework and a unified API layer for capabilities such as safety, evaluations, and post-training. The unified layer streamlines the integration of LLMs, AI agents, and retrieval-augmented generation (RAG) components, which simplifies the creation of agentic workflows.

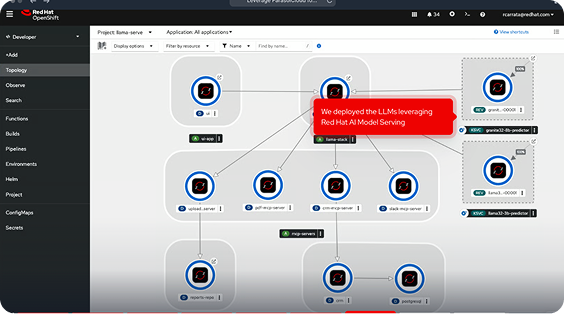

Adaptable and governed agent deployment

Benefit from a safeguarded yet adaptable platform that supports diverse agent frameworks and both agent-to-agent and agent-to-tool connectors. Use the Model Context Protocol (MCP) to connect to tools or as an abstraction layer for connecting agents via Llama Stack. With integrated monitoring and governance capabilities—built on decades of container security experience—you gain visibility into system activity and agent behavior.
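To make the agent-to-tool connection more concrete, here is a minimal sketch of what a tool invocation can look like on the wire. It assumes the publicly documented MCP message shape, in which requests are JSON-RPC 2.0 messages and a tool is invoked with the `tools/call` method. The tool name `get_customer_record` and its arguments are hypothetical, and the helper `build_tool_call` is an illustration only, not part of Llama Stack or any Red Hat product.

```python
import json
from typing import Any, Dict

def build_tool_call(request_id: int, tool_name: str, arguments: Dict[str, Any]) -> str:
    """Serialize an MCP-style tool invocation as a JSON-RPC 2.0 request."""
    request = {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(request)

if __name__ == "__main__":
    # Hypothetical tool and arguments, for illustration only.
    print(build_tool_call(1, "get_customer_record", {"customer_id": "C-42"}))
```

Because every tool is addressed through the same request shape, an agent can call tools from different providers without provider-specific client code.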

Scalable and cost-optimized agent infrastructure

Deploy and manage agentic AI applications consistently across hybrid cloud environments with flexible hardware accelerator options and intelligent resource management. Red Hat OpenShift® AI, which includes Red Hat AI Inference Server, optimizes the power of AI agents in a production environment. This allows for more efficient scaling and drives down traditionally high costs.

Stay flexible

Red Hat AI gives you the flexibility to choose where to train, tune, deploy, and run models and AI applications: on premises, in the public cloud, at the edge, or even in a disconnected environment. By managing your AI models within your environment of choice, you can control access, automate compliance monitoring, and enhance data security.

Red Hat AI

Tune small models with enterprise-relevant data, and develop and deploy AI solutions across hybrid cloud environments.

Your vendors are your choice

We work with software and hardware vendors and open source communities to offer a holistic AI solution.

Access partner products and services that are tested, supported, and certified to perform with our technologies.