Kubernetes is the foundation of the cloud-native approach to application delivery. However, managing the entire application lifecycle, spanning build, security, delivery, observability and operations, requires more than container orchestration. Imagine a comprehensive application platform designed to manage that entire lifecycle, built on a decade of open source innovation within the Cloud Native Computing Foundation (CNCF). That's the vision behind Red Hat OpenShift as an application platform: a complete solution for building and running modern cloud-native applications, and even virtual machines (VMs), across on-premises, public cloud, bare metal and edge infrastructure.

By integrating and automating operations and lifecycle management of Kubernetes and the CNCF technologies, improving resource efficiency and enabling scalable application management, Red Hat OpenShift helps organizations realize faster time to market, stronger security postures and greater operational efficiency. Whether you're modernizing legacy applications, building new cloud-native services or managing applications at the edge, Red Hat OpenShift provides the tools and foundation to help you succeed.

Building on this foundation, Red Hat OpenShift introduces ambient mode as a technology preview in Red Hat OpenShift Service Mesh 3, which simplifies the service mesh data plane by removing the need for sidecars. It also delivers a multicluster GitOps control plane through the new Argo CD agent in Red Hat OpenShift GitOps, providing a scalable and security-focused model for managing applications and Kubernetes configurations across the entire cluster fleet.

Simplifying OpenShift Service Mesh with ambient mode

Service mesh has become a cornerstone of cloud-native application architectures, helping provide security capabilities and manage communication between microservices. OpenShift Service Mesh 3.0 was recently released with a focus on upstream Istio alignment and multicluster scalability. Istio's multicluster topologies enable a single service mesh to span multiple OpenShift clusters, providing high availability and a unified view. This approach is ideal for deployments across clusters, availability zones or regions managed by a single administrative group.

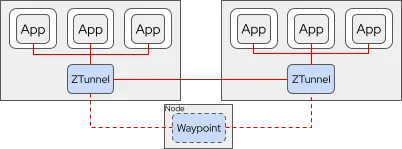

Istio takes advantage of sidecars, programmable proxies deployed automatically alongside application containers, to enable security capabilities, observability and traffic management without requiring any code changes in the application. These capabilities come with the added overhead of running a sidecar proxy alongside each application pod. In some use cases that overhead is not acceptable, and organizations are forced to fall back on traditional network operations instead.

In addition to the sidecar approach, the upcoming release of OpenShift Service Mesh delivers a re-architected experience based on Istio ambient mode, available as a technology preview feature, which helps to address these use cases. This new mode eliminates the need for sidecar proxies by decoupling networking logic from application pods. Instead, ambient mode uses node-level proxies and a security-enhanced overlay layer to handle mutual TLS encryption, and introduces proxies outside of the application workloads to manage traffic at L4 and, optionally, L7.

The per-node zero trust proxy (ztunnel) manages pod-to-pod encryption with cryptographic identities and enables simple authentication and observability. The full set of Istio capabilities can be enabled in an ambient service mesh through additional L7 proxies (called waypoints) deployed in the application namespace, which provide traffic routing, advanced authorization policies and comprehensive observability. Waypoint proxies are regular pods that can be configured to autoscale horizontally and adapt to the real traffic demand of the application they serve, increasing scalability while reducing resource consumption.

This architectural shift brings improvements for both developers and operations teams, with developers no longer needing to adapt their applications to mesh-specific sidecars or configurations, while platform teams benefit from simpler upgrades, reduced resource consumption and lower operational overhead. Because fewer proxies are deployed, clusters can accommodate more applications using fewer compute resources.
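To make the ztunnel and waypoint split more concrete, here is a minimal sketch in Python that builds the two manifests typically involved in enrolling a namespace in ambient mode. The label keys, the `istio-waypoint` gateway class and the HBONE listener follow upstream Istio conventions; whether the OpenShift Service Mesh technology preview uses exactly these values is an assumption, and the `bookinfo` namespace and `waypoint` gateway name are hypothetical.

```python
import json
from typing import Optional


def ambient_namespace(name: str, waypoint: Optional[str] = None) -> dict:
    """Namespace manifest whose pods are captured by the per-node ztunnel (L4)."""
    # Upstream Istio label that opts the namespace into ambient mode.
    labels = {"istio.io/dataplane-mode": "ambient"}
    if waypoint is not None:
        # Optional: send the namespace's traffic through an L7 waypoint proxy.
        labels["istio.io/use-waypoint"] = waypoint
    return {
        "apiVersion": "v1",
        "kind": "Namespace",
        "metadata": {"name": name, "labels": labels},
    }


def waypoint_gateway(name: str, namespace: str) -> dict:
    """Gateway API resource that asks Istio to deploy a waypoint proxy."""
    return {
        "apiVersion": "gateway.networking.k8s.io/v1",
        "kind": "Gateway",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "gatewayClassName": "istio-waypoint",
            "listeners": [{"name": "mesh", "port": 15008, "protocol": "HBONE"}],
        },
    }


if __name__ == "__main__":
    # Hypothetical names; the JSON output can be piped to `oc apply -f -`.
    for manifest in (
        ambient_namespace("bookinfo", waypoint="waypoint"),
        waypoint_gateway("waypoint", "bookinfo"),
    ):
        print(json.dumps(manifest, indent=2))
```

Note that nothing in the application pods changes: enrolling in the mesh is a matter of namespace labels, and the waypoint is only added when L7 features are needed.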

Read more about how to try ambient mode with OpenShift Service Mesh.

Scaling GitOps with Argo CD multicluster control plane

As Kubernetes adoption grows, organizations are increasingly managing fleets of clusters across on-premises datacenters, public clouds and edge environments. GitOps practices, where desired state is stored in Git and automatically synchronized to Kubernetes clusters, have emerged as a common way to manage this complexity while maintaining consistency and visibility into the changes rolled out across clusters. However, traditional GitOps architectures often struggle to scale when hundreds or thousands of clusters must be managed securely and efficiently despite varying networking conditions and communication constraints.

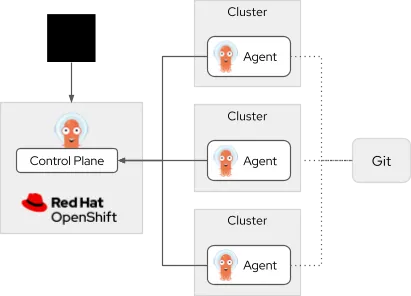

To address GitOps scalability, security and operations challenges in fleet scenarios, Red Hat introduced a new agent-based Argo CD architecture in the Argo community. This new architecture reimagines GitOps at scale by deploying lightweight agents directly into each managed cluster. These agents pull desired state configurations from a centralized Argo CD control plane, applying updates locally without requiring the central server to maintain direct control over each cluster.

Diagram of the new Argo CD agent model. A git repository on the right has dotted lines connecting to three clusters in the center. Each cluster has an Argo agent inside and connects with solid lines to the control plane cluster running OpenShift on the left. A person icon has a solid arrow pointing to the control plane cluster.

This decentralized model improves scalability, reduces resource consumption, enhances security by limiting privileges at the cluster level and increases resilience by allowing agents to continue applying changes even during intermittent connectivity issues. The Argo CD multicluster control plane and agents are now available as a technology preview in Red Hat OpenShift GitOps and are compatible with existing Argo CD workloads.

With Argo CD Agent, OpenShift provides a modern GitOps experience designed to meet the demands of distributed environments, supporting the next generation of hybrid cloud architectures.
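The pull-based behavior described above can be illustrated with a small, purely conceptual simulation. This is not the Argo CD agent's API or implementation: the `ControlPlane` and `Agent` classes, their fields and the `edge-1` cluster name are all hypothetical, and caching the last known desired state is one assumed way an agent could keep reconciling while the control plane is unreachable.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class ControlPlane:
    """Toy stand-in for the central control plane: desired state per cluster."""
    desired: Dict[str, Dict[str, str]] = field(default_factory=dict)
    reachable: bool = True

    def fetch(self, cluster: str) -> Dict[str, str]:
        if not self.reachable:
            raise ConnectionError("control plane unreachable")
        return dict(self.desired.get(cluster, {}))


@dataclass
class Agent:
    """Toy agent running inside a managed cluster."""
    cluster: str
    live: Dict[str, str] = field(default_factory=dict)    # what runs in the cluster
    cached: Dict[str, str] = field(default_factory=dict)  # last desired state seen

    def sync(self, control_plane: ControlPlane) -> None:
        try:
            # The agent initiates the connection; the control plane never
            # needs credentials for, or a route into, the managed cluster.
            self.cached = control_plane.fetch(self.cluster)
        except ConnectionError:
            pass  # keep reconciling against the last known desired state
        self.live = dict(self.cached)  # apply locally


if __name__ == "__main__":
    cp = ControlPlane(desired={"edge-1": {"web": "v1"}})
    agent = Agent("edge-1")
    agent.sync(cp)
    print(agent.live)

    agent.live["web"] = "v0"   # manual drift inside the cluster
    cp.reachable = False       # intermittent connectivity
    agent.sync(cp)
    print(agent.live)          # drift corrected from the cached state
```

The design point the sketch tries to capture is the direction of the connection: each cluster reaches out to the control plane, so the central server holds no cluster-admin credentials for the fleet.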

And there is more

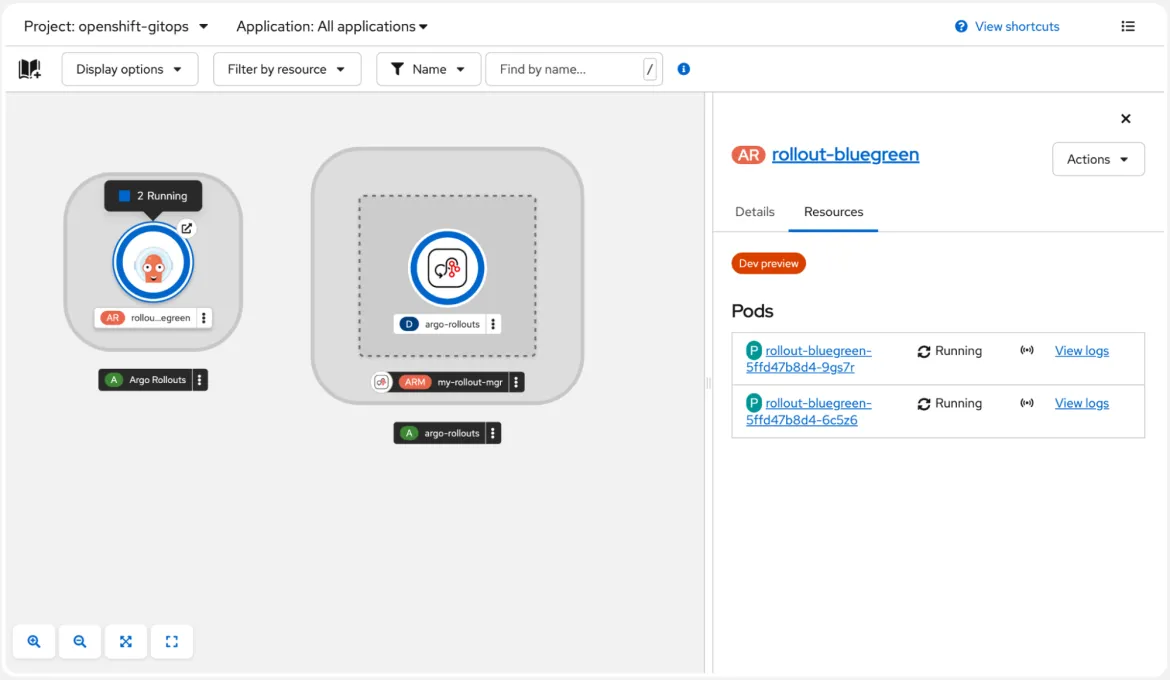

OpenShift GitOps provides Argo Rollouts for progressive delivery and advanced deployment scenarios such as canary releases with automated analysis and rollbacks. Rollouts visualization is now available out of the box within the Argo CD dashboard and is integrated with the OpenShift web console topology view.

This screenshot shows the OpenShift console’s new Argo Rollouts page. A topology view on the left shows a blue/green rollout running. A modal on the right shows the details and resources of the rollout, listing two pods, both running.
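As an illustration of a canary release with automated analysis, the following sketch builds an Argo Rollouts `Rollout` manifest in Python. The `argoproj.io/v1alpha1` API group and the `setWeight`, `pause` and `analysis` step types come from upstream Argo Rollouts; the application name, image, weights and the `success-rate` AnalysisTemplate are hypothetical, and the template itself would have to be defined separately.

```python
import json
from typing import List


def canary_steps(weights: List[int], pause: str, analysis_template: str) -> List[dict]:
    """Shift traffic in stages; pause and run an analysis after each stage."""
    steps: List[dict] = []
    for weight in weights:
        steps.append({"setWeight": weight})
        steps.append({"pause": {"duration": pause}})
        # In upstream Argo Rollouts, a failed analysis run aborts the
        # rollout and traffic returns to the stable version.
        steps.append({"analysis": {"templates": [{"templateName": analysis_template}]}})
    return steps


def rollout(name: str, image: str, steps: List[dict], replicas: int = 4) -> dict:
    """Minimal Rollout manifest using the canary strategy."""
    labels = {"app": name}
    return {
        "apiVersion": "argoproj.io/v1alpha1",
        "kind": "Rollout",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {"containers": [{"name": name, "image": image}]},
            },
            "strategy": {"canary": {"steps": steps}},
        },
    }


if __name__ == "__main__":
    # Hypothetical application, image and analysis template names.
    steps = canary_steps([20, 50], pause="60s", analysis_template="success-rate")
    print(json.dumps(rollout("web", "quay.io/example/web:v2", steps), indent=2))
```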

With the rapid adoption of GitOps workflows, external secret managers have become increasingly critical for preventing sensitive data from being stored in, and exposed through, Git repositories. OpenShift includes the Secrets Store CSI Driver to facilitate integration with common secret managers. We are now introducing External Secrets as a technology preview feature to enable compatibility and GitOps workflows with a wider range of workloads and use cases.
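The sketch below shows why this pattern suits GitOps: the manifest committed to Git holds only a reference to the secret, never its value. The `ExternalSecret` kind and its `secretStoreRef`, `target` and `data[].remoteRef` fields follow the upstream External Secrets Operator; the API version served by the OpenShift technology preview is an assumption (hence the parameter), and all names and keys are hypothetical.

```python
import json
from typing import List


def external_secret(
    name: str,
    namespace: str,
    store: str,
    remote_key: str,
    properties: List[str],
    api_version: str = "external-secrets.io/v1beta1",  # assumption: may differ per release
) -> dict:
    """ExternalSecret manifest: safe to commit, since it carries no secret values."""
    return {
        "apiVersion": api_version,
        "kind": "ExternalSecret",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "refreshInterval": "1h",
            # Points at a SecretStore that knows how to reach the secret manager.
            "secretStoreRef": {"name": store, "kind": "SecretStore"},
            # Name of the Kubernetes Secret the operator creates in the cluster.
            "target": {"name": name},
            "data": [
                {"secretKey": prop, "remoteRef": {"key": remote_key, "property": prop}}
                for prop in properties
            ],
        },
    }


if __name__ == "__main__":
    # Hypothetical store and key names.
    manifest = external_secret(
        "db-credentials", "shop", "vault-backend", "apps/shop/db", ["username", "password"]
    )
    print(json.dumps(manifest, indent=2))
```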

Furthermore, Red Hat OpenShift Pipelines, the Kubernetes-native, security-focused pipelines based on Tekton, brings StepActions as a generally available (GA) capability, enabling teams to share and distribute CI/CD building blocks across Tekton Tasks and facilitating reuse and standardization across organizations. It also introduces a new enhanced pruner that enables flexible pipeline pruning policies based on time-to-live, number of executions, successes and failures, and other criteria, giving teams granular control over the pruning process.
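To give a feel for how such pruning criteria combine, here is a conceptual Python sketch of a selection policy. It is not the OpenShift Pipelines pruner's configuration format or implementation: the `Run` type, the function name and the `ttl`, `keep_successful` and `keep_failed` parameters are hypothetical, and treating a run as prunable when any one criterion matches is an assumption.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List, Optional


@dataclass(frozen=True)
class Run:
    name: str
    succeeded: bool
    finished: datetime


def runs_to_prune(
    runs: List[Run],
    now: datetime,
    ttl: Optional[timedelta] = None,
    keep_successful: Optional[int] = None,
    keep_failed: Optional[int] = None,
) -> List[str]:
    """Return names of runs that exceed the TTL or the per-outcome history limit."""
    prune = set()
    if ttl is not None:
        prune |= {r.name for r in runs if now - r.finished > ttl}
    for outcome, limit in ((True, keep_successful), (False, keep_failed)):
        if limit is None:
            continue
        # Newest first; everything past the limit is eligible for pruning.
        matching = sorted(
            (r for r in runs if r.succeeded == outcome),
            key=lambda r: r.finished,
            reverse=True,
        )
        prune |= {r.name for r in matching[limit:]}
    return sorted(prune)


if __name__ == "__main__":
    now = datetime(2025, 1, 10)
    history = [
        Run("a", True, datetime(2025, 1, 1)),
        Run("b", True, datetime(2025, 1, 8)),
        Run("c", True, datetime(2025, 1, 9)),
        Run("d", False, datetime(2025, 1, 9)),
        Run("e", False, datetime(2025, 1, 5)),
    ]
    print(runs_to_prune(history, now, ttl=timedelta(days=7), keep_successful=1, keep_failed=1))
```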

To learn more, visit:

Red Hat OpenShift Container Platform | Product trial

About the author

Siamak Sadeghianfar is a member of the Hybrid Cloud product management team at Red Hat leading the cloud-native application build and delivery on OpenShift.
